Contrastive Predictive Coding for Representation Learning

An exploration of Noise Contrastive Estimation (NCE) and how it enables Contrastive Predictive Coding (CPC) for learning useful representations from high-dimensional sequential data like speech.

What is Noise Contrastive Estimation (NCE)?

Let $x$ be a random vector with an unknown probability density $p_d(x)$. We wish to model this unknown data density with a parameterised family of functions $\{p_m(x; \theta)\}_\theta$, where $\theta$ is a vector of parameters. This assumes that $p_d$ lies within our chosen parameterised family, i.e. that $p_d(x) = p_m(x; \theta^*)$ for some $\theta^*$. The question we are concerned with is how to select $\theta$ such that $p_m(x; \theta) = p_d(x)$.

Since the density has to be normalised (integrate to 1), we can redefine the p.d.f. so that this constraint is always satisfied: $p_m(x; \theta) = p_m^0(x; \theta) / Z(\theta)$, where $Z(\theta) = \int p_m^0(u; \theta)\, du$ and $p_m^0(x; \theta)$ specifies a functional form of the density that doesn’t need to integrate to 1.

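The issue is that computing the normalising coefficient $Z$ is generally intractable. NCE optimises the unnormalised model and the normalisation parameter by maximising the same objective: the normalisation constant is treated as another parameter of the model. I.e., $\ln p_m(x; \theta) = \ln p_m^0(x; \alpha) + c$ with $\theta = \{\alpha, c\}$, where $\alpha$ are the parameters of the unnormalised model and $c$ is an estimate of $-\ln Z(\alpha)$.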

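Let $X = (x_1, \dots, x_T)$ be the observed data set consisting of $T$ observations of the data $x$. Let $Y = (y_1, \dots, y_T)$ be an artificially generated data set of noise with distribution $p_n$. The objective function for $\theta$ is:

$$J_T(\theta) = \frac{1}{2T} \sum_{t=1}^{T} \Big\{ \ln\left[h(x_t; \theta)\right] + \ln\left[1 - h(y_t; \theta)\right] \Big\}$$

where $h(u; \theta) = \frac{1}{1 + \exp[-G(u; \theta)]}$ and $G(u; \theta) = \ln p_m(u; \theta) - \ln p_n(u)$. This can be seen as the log-likelihood of a logistic regression model which discriminates the data $X$ from the noise $Y$.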
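
As a minimal sketch of the NCE objective, the following evaluates it on a one-dimensional toy problem. Everything specific here is an assumption made for illustration and is not taken from the NCE paper: the data are standard Gaussian, the unnormalised model is $\exp(-u^2/2)$ with a single offset `c` standing in for the log-normaliser, and the noise is a zero-mean Gaussian with standard deviation 2.

```python
import math
import random

def log_model(u, c):
    """ln p_m(u): unnormalised standard Gaussian plus the offset c."""
    return -0.5 * u * u + c

def log_noise(u, scale=2.0):
    """ln p_n(u): zero-mean Gaussian noise with standard deviation `scale`."""
    return -0.5 * (u / scale) ** 2 - math.log(scale * math.sqrt(2.0 * math.pi))

def h(u, c):
    """Logistic function of G(u) = ln p_m(u) - ln p_n(u)."""
    g = log_model(u, c) - log_noise(u)
    return 1.0 / (1.0 + math.exp(-g))

def nce_objective(data, noise, c):
    """J_T = 1/(2T) * sum_t [ln h(x_t) + ln(1 - h(y_t))]."""
    T = len(data)
    total = sum(math.log(h(x, c)) + math.log(1.0 - h(y, c))
                for x, y in zip(data, noise))
    return total / (2 * T)

rng = random.Random(0)
data = [rng.gauss(0.0, 1.0) for _ in range(2000)]   # samples standing in for p_d
noise = [rng.gauss(0.0, 2.0) for _ in range(2000)]  # samples from p_n

true_c = -math.log(math.sqrt(2.0 * math.pi))  # -ln Z of the standard Gaussian
print(nce_objective(data, noise, true_c))
print(nce_objective(data, noise, true_c + 3.0))  # badly normalised model
```

The second value should come out lower than the first, since a model whose normaliser is far from correct discriminates data from noise less well.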

NCE Connection to Supervised Learning

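Let $U = (u_1, \dots, u_{2T})$ be the union of the two sets $X$ and $Y$. Each $u_t$ has a class label $C_t$, where $C_t = 1$ if $u_t \in X$ and $C_t = 0$ if $u_t \in Y$. In logistic regression, the posterior probabilities of the classes given the data are estimated. We don’t know the p.d.f. of the data, so the class-conditional probability $p(u \mid C = 1)$ is modelled with $p_m(u; \theta)$, while $p(u \mid C = 0) = p_n(u)$. Since both classes are equally likely, we then have:

$$P(C = 1 \mid u; \theta) = \frac{p_m(u; \theta)}{p_m(u; \theta) + p_n(u)}, \qquad P(C = 0 \mid u; \theta) = \frac{p_n(u)}{p_m(u; \theta) + p_n(u)}$$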
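
To make the link to logistic regression explicit, here is a short worked derivation. It assumes equal class priors, $P(C = 1) = P(C = 0) = 1/2$ (there are $T$ samples of each class), writes $p_m$ for the model density and $p_n$ for the noise density, and uses $G(u; \theta) = \ln p_m(u; \theta) - \ln p_n(u)$ and $h = 1 / (1 + \exp[-G])$, following the notation of Gutmann and Hyvärinen. $J_T$ denotes the NCE objective.

$$
\begin{aligned}
P(C = 1 \mid u; \theta)
  &= \frac{p_m(u; \theta)\, P(C = 1)}{p_m(u; \theta)\, P(C = 1) + p_n(u)\, P(C = 0)}
   = \frac{1}{1 + \frac{p_n(u)}{p_m(u; \theta)}}
   = \frac{1}{1 + \exp[-G(u; \theta)]}
   = h(u; \theta) \\
\ell(\theta)
  &= \sum_{t=1}^{2T} \Big\{ C_t \ln P(C_t = 1 \mid u_t; \theta) + (1 - C_t) \ln P(C_t = 0 \mid u_t; \theta) \Big\} \\
  &= \sum_{t=1}^{T} \Big\{ \ln h(x_t; \theta) + \ln\left[1 - h(y_t; \theta)\right] \Big\}
   = 2T\, J_T(\theta)
\end{aligned}
$$

So maximising the Bernoulli log-likelihood of the class labels is the same as maximising the NCE objective, up to the constant factor $2T$.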

Basic Idea: Estimate the parameters by learning to discriminate between data x and some artificially generated noise y.

Theorem 1: In the limit of a large sample size $T$, the proposed objective attains its maximum when $p_m(\cdot; \theta) = p_d$. This optimum is global, with no other extrema, if the noise density $p_n$ is chosen so that it is non-zero wherever $p_d$ is non-zero.

The key benefit of this method is that we can optimise the model without knowing the normalising coefficient.
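
As a hedged illustration of this benefit, the sketch below estimates the log-normaliser of a toy model purely by discriminating data from noise. The setup is an assumption made for the example, not something from the NCE paper: standard Gaussian data, the unnormalised model $\exp(-u^2/2)$ with a single offset `c`, Gaussian noise with standard deviation 2, and plain gradient ascent with a hypothetical step size.

```python
import math
import random

def h(u, c, noise_std=2.0):
    """P(C = 1 | u) for ln p_m(u) = -u^2 / 2 + c against Gaussian noise."""
    log_noise = (-0.5 * (u / noise_std) ** 2
                 - math.log(noise_std * math.sqrt(2.0 * math.pi)))
    g = (-0.5 * u * u + c) - log_noise
    return 1.0 / (1.0 + math.exp(-g))

def fit_log_normaliser(data, noise, steps=100, lr=2.0):
    """Gradient ascent on the NCE objective with respect to the offset c only."""
    c = 0.0
    T = len(data)
    for _ in range(steps):
        # dJ/dc = 1/(2T) * sum_t [(1 - h(x_t)) - h(y_t)], because dG/dc = 1.
        grad = sum((1.0 - h(x, c)) - h(y, c)
                   for x, y in zip(data, noise)) / (2 * T)
        c += lr * grad
    return c

rng = random.Random(0)
data = [rng.gauss(0.0, 1.0) for _ in range(2000)]
noise = [rng.gauss(0.0, 2.0) for _ in range(2000)]

c_hat = fit_log_normaliser(data, noise)
# Compare the estimate with the analytic value -ln Z = -ln sqrt(2 * pi).
print(c_hat, -math.log(math.sqrt(2.0 * math.pi)))
```

The integral defining the normaliser is never computed; `c` is learned like any other parameter.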

Representation Learning with Contrastive Predictive Coding

The objective is to learn representations that encode information shared between different parts of a high-dimensional signal (e.g. speaker information) while discarding low-level information and noise that is more local to each distinct region.

What is Contrastive Predictive Coding?

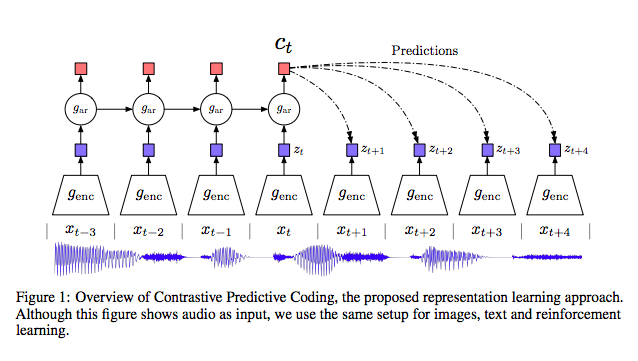

An encoder $g_{\text{enc}}$ maps an input sequence of observations $x_t$ to a sequence of latent representations $z_t = g_{\text{enc}}(x_t)$, potentially with a lower temporal resolution.

An autoregressive model $g_{\text{ar}}$ summarises all $z_{\le t}$, i.e. all the z’s up to the current time step, producing a context latent representation $c_t = g_{\text{ar}}(z_{\le t})$. The figure above shows the CPC structure (Figure 1 from the CPC paper [2]).
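
To make the shapes concrete, here is a deliberately tiny sketch of the two components. Both functions are toy stand-ins chosen for illustration and are not the architecture used in the CPC paper: `encode` projects non-overlapping windows to a single latent value (so the latents have a lower temporal resolution), and `summarise` is a simple causal recurrence, so each context depends only on the latents up to that time step.

```python
import math

def encode(x, window, weights):
    """Toy g_enc: project each non-overlapping window of x to one latent value."""
    frames = [x[i:i + window] for i in range(0, len(x) - window + 1, window)]
    return [math.tanh(sum(w * v for w, v in zip(weights, frame)))
            for frame in frames]

def summarise(z, decay=0.5):
    """Toy g_ar: causal recurrence c_t = tanh(decay * c_{t-1} + z_t)."""
    contexts, c = [], 0.0
    for z_t in z:
        c = math.tanh(decay * c + z_t)
        contexts.append(c)
    return contexts

x = [math.sin(0.1 * n) for n in range(160)]  # stand-in for a raw waveform
z = encode(x, window=16, weights=[1.0 / 16] * 16)
c = summarise(z)
print(len(x), len(z), len(c))  # 160 10 10
```

Because the recurrence is causal, running `summarise` on a prefix of `z` reproduces the matching prefix of `c`.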

We do not want to predict future observations $x_{t+k}$ directly with a generative model $p_k(x_{t+k} \mid c_t)$, as this is a difficult and expensive task. Moreover, the objective is representation learning, so learning a generative model is unnecessary. NCE provides a framework to build on for solving this problem. We instead model a density ratio that preserves the mutual information between $x_{t+k}$ and $c_t$:

$$f_k(x_{t+k}, c_t) \propto \frac{p(x_{t+k} \mid c_t)}{p(x_{t+k})}$$

Since $f_k$ is a density ratio, it does not need to be normalised. In this case, $f_k$ is implemented as a log-bilinear model:

$$f_k(x_{t+k}, c_t) = \exp\left(z_{t+k}^\top W_k c_t\right)$$
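
A minimal sketch of the log-bilinear score $\exp(z_{t+k}^\top W_k c_t)$ for plain Python lists follows. The function name, the weights and the vectors are hypothetical values chosen only to show the computation.

```python
import math

def log_bilinear_score(z_future, W_k, c_t):
    """Return f_k = exp(z_future^T W_k c_t)."""
    # W_k c_t can be read as a linear prediction of the future latent.
    prediction = [sum(w * c for w, c in zip(row, c_t)) for row in W_k]
    return math.exp(sum(z * p for z, p in zip(z_future, prediction)))

W_k = [[0.5, 0.0], [0.0, 0.5]]  # hypothetical weights for one step size k
c_t = [2.0, 0.0]                # hypothetical context vector

print(log_bilinear_score([1.0, 0.0], W_k, c_t))   # latent aligned with the prediction
print(log_bilinear_score([-1.0, 0.0], W_k, c_t))  # latent opposed to the prediction
```

The score is always positive and grows as the encoded future latent agrees with the linear prediction made from the context, which is all a density ratio needs.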

I found the order in which this was presented a little confusing. In practice, the above formulation for $f_k$ is chosen, and the InfoNCE objective described below is then at its optimum when $f_k$ is proportional to the above density ratio.

Using a density ratio and inferring $z_{t+k}$ with an encoder means we don’t have to model the high-dimensional distribution of $x_{t+k}$. We cannot evaluate $p(x)$ or $p(x \mid c)$ directly. However, samples can be drawn from these distributions, which allows the use of NCE-based objectives.

Either $z_t$ or $c_t$ could be used for downstream tasks. The context $c_t$ could be used if context from the past is useful; if no context is needed, then $z_t$ could be a better representation. Lastly, if the downstream task requires a representation of the whole sequence, one can pool the representations from either $z_t$ or $c_t$ over all locations.
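
As one possible way to pool over all locations, the sketch below averages per-time-step vectors into a single sequence-level vector. Mean pooling is an assumption made here for illustration; the text above does not specify a pooling method, and the function name is hypothetical.

```python
def mean_pool(sequence):
    """Average a list of equal-length vectors (one per time step) over time."""
    T = len(sequence)
    return [sum(column) / T for column in zip(*sequence)]

# Two time steps of a hypothetical 2-dimensional representation (z_t or c_t).
representations = [[1.0, 2.0], [3.0, 4.0]]
print(mean_pool(representations))  # [2.0, 3.0]
```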

What is InfoNCE?

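Given a set $X = \{x_1, \dots, x_N\}$ of $N$ random samples containing one positive sample from $p(x_{t+k} \mid c_t)$ and $N-1$ negative samples from the ‘proposal’ distribution $p(x_{t+k})$, we optimise:

$$\mathcal{L}_N = -\mathbb{E}_X\left[\log \frac{f_k(x_{t+k}, c_t)}{\sum_{x_j \in X} f_k(x_j, c_t)}\right]$$

In other words, this is the categorical cross-entropy of classifying the positive sample correctly, where $\frac{f_k}{\sum_X f_k}$ is the prediction of the model.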
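
For a single set of $N$ samples, the loss can be sketched as a softmax cross-entropy over log-scores. The function below is a minimal illustration rather than the paper's implementation: it assumes the caller supplies $\log f_k(x_j, c_t)$ for each sample (for a log-bilinear model this is $z_j^\top W_k c_t$) together with the index of the positive sample, and it uses the log-sum-exp trick for numerical stability.

```python
import math

def info_nce(log_scores, positive_index):
    """Return -log( f_pos / sum_j f_j ) given log f_j for all N samples."""
    m = max(log_scores)
    # log-sum-exp: subtract the maximum before exponentiating to avoid overflow.
    log_sum = m + math.log(sum(math.exp(s - m) for s in log_scores))
    return log_sum - log_scores[positive_index]

uninformative = [0.0, 0.0, 0.0, 0.0]  # model cannot tell the samples apart
informative = [2.0, 0.0, 0.0, 0.0]    # positive sample (index 0) scores highest

print(info_nce(uninformative, 0))  # equals log(4), the chance-level loss for N = 4
print(info_nce(informative, 0))    # lower, because the positive stands out
```

In training, this quantity would be averaged over many sets of samples, which corresponds to the expectation in the objective.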

A distinction between NCE and InfoNCE: In NCE, we consider binary classification between the true distribution and noise distribution. Here, we are optimising a multiclass classification problem.

Implementation

As part of my research into CPC, I decided to implement the proposed algorithm, as no sample implementation is provided with the paper and I was not able to find many implementations online. I was interested in a speech application and decided to learn a representation of speech signals from the “SpeechMNIST” dataset (people speaking the digits zero through nine, taken from the Google Speech Commands Dataset). The implementation can be found as a GitHub Gist.

Disclaimer: I am not 100% confident this implementation is entirely correct.

References

[1] Gutmann, M. and Hyvärinen, A., 2010, March. “Noise-contrastive estimation: A new estimation principle for unnormalized statistical models.” In Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics (pp. 297-304).

[2] Oord, A.V.D., Li, Y. and Vinyals, O., 2018. “Representation learning with contrastive predictive coding.” arXiv preprint arXiv:1807.03748.