On the Issues with Transpose Convolution and GAN-Based Synthesis

In this post, we discuss the checkerboard artefacts introduced by transpose convolution layers, as well as proposed solutions to this problem. We also present experiments on audio synthesis that further motivate these solutions.

It is well known that for Generative Adversarial Networks (GANs) [1] trained in the image domain, generators built from transpose convolution layers can cause artefacts in both the high and low frequencies. These artefacts present as a checkerboard pattern in the generated images. “Deconvolution and Checkerboard Artifacts” [2] is a superbly written article on Distill.pub that discusses this problem. I will first summarise [2] to motivate the issue. Then, we will look at “checkerboarding” in the audio domain, since [2] focuses on images. Lastly, I will discuss the checkerboard effect in relation to my research on speech synthesis with Generative Adversarial Networks.

Summary of [2]

In general, the generator of a GAN takes as input a low-dimensional noise vector, which it must upscale into an image. Typically, there is a large discrepancy between the latent dimensionality and the data dimensionality (e.g., 64 or 128 latent features, compared with one value per pixel and colour channel in the case of CelebA). Hence, the generator must fill in a lot of information.

Transpose convolution (or deconvolution) is a standard method for this upscaling. However, problems arise when some regions of the upscaled image receive more attention than others. A nice intuition-building argument from [2] is that every pixel in the input is used to “paint” a region of the larger output image; if these regions overlap unevenly, the operation ends up “putting more paint in some places than others”. More specifically, this uneven overlap occurs when the kernel size is not divisible by the stride, and [2] has some very nice illustrations of it.
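The “paint” intuition can be checked numerically. Below is a naive, pure-Python 1-D transposed convolution; the helper `transpose_conv1d` is hypothetical, written for illustration rather than taken from [2] or any library. Feeding it a constant input and an all-ones kernel makes each output value count how many input samples painted that position.

```python
def transpose_conv1d(x, kernel, stride):
    """Naive 1-D transposed convolution without padding: every input sample
    'paints' a scaled copy of the kernel into the output, offset by `stride`."""
    out = [0.0] * ((len(x) - 1) * stride + len(kernel))
    for i, value in enumerate(x):
        for j, weight in enumerate(kernel):
            out[i * stride + j] += value * weight
    return out


# Constant input and all-ones kernels: each output counts its "coats of paint".
x = [1.0] * 4
print(transpose_conv1d(x, [1.0] * 3, stride=2))  # kernel 3, stride 2 (uneven overlap)
# [1.0, 1.0, 2.0, 1.0, 2.0, 1.0, 2.0, 1.0, 1.0]
print(transpose_conv1d(x, [1.0] * 4, stride=2))  # kernel 4, stride 2 (even overlap)
# [1.0, 1.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 1.0, 1.0]
```

Away from the borders, the kernel-3 output alternates between 2 and 1, a 1-D checkerboard, while the kernel-4 output is flat.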

Stacking transpose convolutions generally compounds this issue rather than reducing it, and can produce artefacts in both the high- and low-frequency content of the image.
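To see the compounding effect, it helps to recall that a transposed convolution with stride s is equivalent to inserting s - 1 zeros between input samples and then applying an ordinary convolution. The pure-Python sketch below uses that formulation to stack two stride-2 layers on a constant input; all helper names are hypothetical and chosen for illustration.

```python
def zero_insert(x, stride):
    """Place the input samples `stride` apart, with zeros in between."""
    z = [0.0] * ((len(x) - 1) * stride + 1)
    for i, value in enumerate(x):
        z[i * stride] = value
    return z


def full_conv(z, kernel):
    """'Full' 1-D convolution: output length is len(z) + len(kernel) - 1."""
    out = [0.0] * (len(z) + len(kernel) - 1)
    for p, value in enumerate(z):
        for j, weight in enumerate(kernel):
            out[p + j] += value * weight
    return out


def transpose_conv1d(x, kernel, stride):
    """Transposed convolution written as zero insertion followed by convolution."""
    return full_conv(zero_insert(x, stride), kernel)


x = [1.0] * 4
for kernel in ([1.0] * 3, [1.0] * 4):
    once = transpose_conv1d(x, kernel, stride=2)
    twice = transpose_conv1d(once, kernel, stride=2)  # two stacked layers
    print(len(kernel), twice)
```

With kernel size 3, the interior of the twice-upsampled output repeats 3, 2, 3, 1, so the artefact now also has a four-sample period, i.e. a lower-frequency component than the single-layer checkerboard. With kernel size 4, the interior stays flat at 4.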

Intuitively, at least in the case of GANs, this should make it very easy for the discriminator/critic to detect which images are fake, so the generator should learn to avoid the checkerboard effect. In practice, however, it is difficult for the model to avoid learning it. Interestingly, even models with even overlap (i.e., where the kernel size is divisible by the stride) often learn these same artefacts. Hence, a different approach to upsampling is needed.
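One way to see why even overlap alone does not guarantee a flat output: for a constant input, an interior output position p of a stride-s transposed convolution receives exactly the kernel taps j with j % s == p % s. The hypothetical helper below computes those per-phase sums for a 1-D kernel; if they differ, the output is a checkerboard even when every phase has the same number of taps.

```python
def phase_sums(kernel, stride):
    """Steady-state output of a 1-D transposed convolution fed a constant
    input of 1.0: output phase r sees the sum of kernel taps j with j % stride == r."""
    return [sum(kernel[r::stride]) for r in range(stride)]


# Both kernels have size 4 and stride 2, so the overlap is even.
print(phase_sums([1.0, 0.5, 1.0, 0.5], stride=2))  # [2.0, 1.0] -> checkerboard
print(phase_sums([0.5, 1.0, 1.0, 0.5], stride=2))  # [1.5, 1.5] -> flat
```

Only the second kernel, whose per-phase sums match, produces a flat output. A generator would have to keep its learned weights balanced in this way, which is one intuition for why the artefacts are hard to avoid in practice.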

The authors of [2] propose separating the upsampling from the convolution: first upsample the image using some form of interpolation (nearest-neighbour or bilinear), then apply a standard convolutional layer. They provide figures demonstrating that these resize convolutions remove the checkerboard effect.
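A minimal 1-D sketch of the resize convolution idea, in pure Python with hypothetical helper names: nearest-neighbour upsampling followed by a stride-1 convolution (here with 'valid' padding, to keep border effects out of the picture). With a constant input, kernel size 3 and an upscaling factor of 2, a combination that gives uneven overlap for a transpose convolution, every output sample is the same.

```python
def nearest_upsample(x, factor):
    """Nearest-neighbour upsampling: repeat each sample `factor` times."""
    return [v for v in x for _ in range(factor)]


def conv1d_valid(x, kernel):
    """Stride-1 'valid' 1-D cross-correlation (what deep-learning conv layers compute)."""
    k = len(kernel)
    return [sum(x[p + j] * kernel[j] for j in range(k)) for p in range(len(x) - k + 1)]


def resize_conv1d(x, kernel, factor):
    """Resize convolution: upsample first, then convolve with stride 1."""
    return conv1d_valid(nearest_upsample(x, factor), kernel)


print(resize_conv1d([1.0] * 4, [1.0, 1.0, 1.0], factor=2))
# [3.0, 3.0, 3.0, 3.0, 3.0, 3.0]
```

Because the convolution has stride 1, every output position sees a full kernel, so the overlap is uniform by construction, whatever the kernel size.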

[2] discusses more than what is summarised here and I would recommend reading it in its entirety.

“Checkerboarding” in Audio

The paper “Adversarial Audio Synthesis” [5] looks, in part, at the checkerboard issue with transpose convolutions specifically in the context of audio synthesis with GANs. The authors claim that periodic patterns are less common in the image domain, and hence the discriminator can easily reject images with checkerboard artefacts as fake. While this might be true, [2] discusses how the checkerboard effect is difficult to unlearn. Therefore, even if the discriminator can easily reject images with checkerboard artefacts, this does not mean that the generator can avoid producing these distortions.

The authors observe that in audio, the checkerboard effect presents as “pitched noise”, which can overlap with frequencies in the real data, making it difficult for the critic to detect audio with this distortion. However, they argue that these artefacts always occur at the same phase, meaning that the discriminator can learn a trivial policy to reject generated audio.

To counter this, [5] proposes phase shuffle, an operation that randomly shifts the phase of each layer's activations in the critic. This makes the critic's job more difficult, since it must become invariant to phase. However, as mentioned before, [2] argues that the checkerboard behaviour is inherent to transpose convolutions and is difficult for the model to learn to avoid. Hence, phase shuffle prevents the critic from learning a trivial rejection policy, but it does not necessarily mean that the generator can avoid the checkerboard effect.
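The sketch below illustrates phase shuffle on plain Python lists, loosely following the description in [5]: one random shift in [-rad, rad] is drawn per call and applied to every channel, and the samples shifted out of range are replaced by a reflection of the signal. The function names and the default `rad=2` are illustrative assumptions, not the reference WaveGAN implementation.

```python
import random


def shift_reflect(x, shift):
    """Shift a 1-D sequence by `shift` samples (positive = later in time),
    filling the vacated edge by reflection so the length stays the same."""
    x = list(x)
    n = len(x)
    pad_l, pad_r = max(shift, 0), max(-shift, 0)
    left = x[1:pad_l + 1][::-1]           # reflection of the start (edge sample excluded)
    right = x[n - pad_r - 1:n - 1][::-1]  # reflection of the end (edge sample excluded)
    padded = left + x + right
    return padded[pad_r:pad_r + n]


def phase_shuffle(channels, rad=2, rng=random):
    """Draw one shift in [-rad, rad] and apply it to every channel of a layer,
    so the critic cannot rely on artefacts appearing at a fixed phase."""
    shift = rng.randint(-rad, rad)
    return [shift_reflect(c, shift) for c in channels]


signal = [0, 1, 2, 3, 4, 5]
print(shift_reflect(signal, 2))   # [2, 1, 0, 1, 2, 3]
print(shift_reflect(signal, -2))  # [2, 3, 4, 5, 4, 3]
```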

In the recent paper “High fidelity speech synthesis with adversarial networks” [6], the authors propose GAN-TTS, which can generate high-quality speech conditioned on linguistic and pitch features. While we do not go into detail on their model, it is worth noting that the authors use resize convolutions, which further motivates their use.

The Problem at Hand

I am interested in using a GAN for speech synthesis, with the end goal of applying it to generative speech enhancement. However, for illustration, we will consider the following simplified setup.

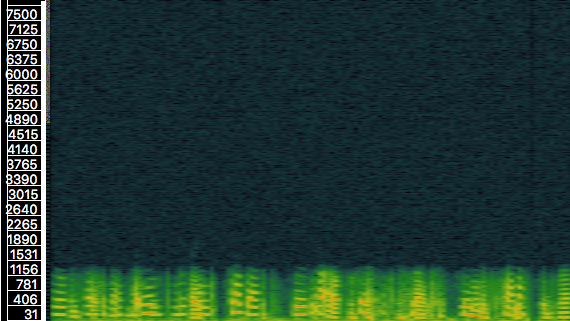

Let X be a dataset of speech windows, each 2048 samples long (taken from 16 kHz signals). Our objective is to learn a generative model of these 2048-sample speech windows. As an additional simplification, we consider low-pass filtered signals, attenuating frequencies above 1500 Hz. For reference, the spectrogram of 64 low-pass filtered speech windows joined together is given in the following figure.

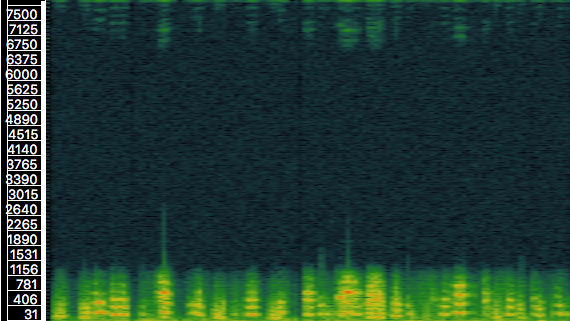
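The data preparation described above can be sketched in NumPy as follows. This is a minimal sketch under assumptions: “16k” is read as a 16 kHz sampling rate, windows are taken without overlap, and the low-pass filter is a brick-wall mask on each window's FFT. The post does not specify which filter or windowing scheme was used, and the helper names are hypothetical.

```python
import numpy as np

SAMPLE_RATE = 16_000  # assumption: "16k" read as a 16 kHz sampling rate
WINDOW = 2048         # samples per window, i.e. 128 ms at 16 kHz
CUTOFF_HZ = 1500.0    # low-pass cutoff used in the post


def make_windows(signal, window=WINDOW):
    """Split a 1-D signal into non-overlapping windows, dropping any remainder."""
    signal = np.asarray(signal, dtype=np.float64)
    n_windows = len(signal) // window
    return signal[: n_windows * window].reshape(n_windows, window)


def lowpass_windows(windows, cutoff=CUTOFF_HZ, sample_rate=SAMPLE_RATE):
    """Brick-wall low-pass: zero every FFT bin above `cutoff` in each window."""
    spectrum = np.fft.rfft(windows, axis=-1)
    freqs = np.fft.rfftfreq(windows.shape[-1], d=1.0 / sample_rate)
    spectrum[..., freqs > cutoff] = 0.0
    return np.fft.irfft(spectrum, n=windows.shape[-1], axis=-1)


# Usage: a 1 kHz tone survives, a 4 kHz tone is removed.
t = np.arange(4 * WINDOW) / SAMPLE_RATE
audio = np.sin(2 * np.pi * 1000 * t) + np.sin(2 * np.pi * 4000 * t)
filtered = lowpass_windows(make_windows(audio))
print(filtered.shape)  # (4, 2048)
```

A brick-wall FFT mask treats each window as periodic and can cause ringing on real speech; it only illustrates the shape of the pipeline.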

For the generative model, we use the Wasserstein GAN [3] with gradient penalty [4]. The critic used convolutional layers, and the initial generator consisted of transpose convolutions with a latent dimensionality of 64. This produced the following result, this time showing the spectrogram of 64 generated windows concatenated together.

The reader can observe harmonics in the high frequencies of the generated signal; these are not present in the data and hence are an artefact of the model. These artefacts can be explained by the checkerboard effect that occurs in transpose convolutions. Note that in the experiment above, the kernel size and stride were chosen so that the stride divides the kernel size; the artefacts therefore appear despite even overlap, in line with the observation in [2] that such models often learn them anyway.

In the following experiment, we instead use resize convolution layers in place of the transpose convolutions. We use the following TensorFlow implementation of resize convolution with nearest-neighbour interpolation, since [2] reports that this worked better:

def resize_convolution(x, kernel_size=22, filters=1, padding='same', activation=None, upscale_factor=2):

# x has shape [bs, length, features]

# we want to make it [bs, length, 1, features] so we can use 'tf.image.resize'

shape = x.shape

x = tf.reshape(x, (x.shape[0], x.shape[1], 1, x.shape[2]))

x_up = tf.image.resize(x, [upscale_factor*shape[1], 1], method=tf.image.ResizeMethod.NEAREST_NEIGHBOR)

x_up = tf.reshape(x_up, (-1, upscale_factor*shape[1], shape[2]))

# x_up is then the upscaled vector

# with shape [bs, upscale_factor * length, features]

# Apply Convolution and return result

x_conv = tf.layers.conv1d(x_up, strides=1, kernel_size=kernel_size, filters=filters, activation=activation, padding=padding)

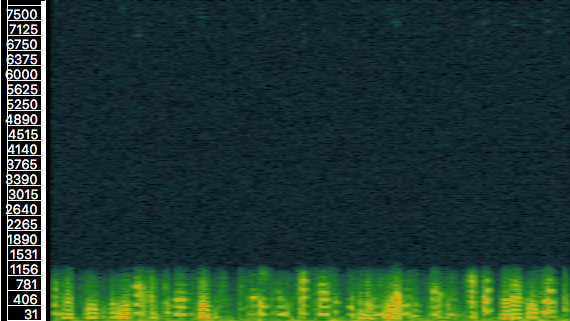

return x_convUsing this implementation of resize-convolution in place of transpose convolutions in WGAN’s generator, we are able to achieve the following, significantly improved, results:

There is still a small amount of distortion in the high frequencies, but far less than with the transpose convolution generator.

Conclusions

I found [2] to provide an interesting and convincing argument for why the checkerboard problem deserves attention. The speech synthesis experiments in this post further convinced me of the benefits of alternatives to transpose convolutions. However, there is likely still room for improvement: even with resize convolutions, we observe some artefacts in the high frequencies. Perhaps the remaining artefacts result from the strided convolutions in the critic; [2] discusses how back-propagating gradients through strided convolutional layers can cause artefacts in the gradients.
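The gradient-artefact argument can also be illustrated in 1-D: the backward pass of a strided convolution is a transposed convolution, so uneven overlap shows up in the gradient with respect to the input. The pure-Python sketch below (helper names hypothetical) computes that gradient for a stride-2, size-3 all-ones kernel by feeding unit impulses, which is exact because the layer is linear.

```python
def strided_conv1d(x, kernel, stride):
    """'Valid' (no padding) 1-D cross-correlation with the given stride."""
    k = len(kernel)
    n_out = (len(x) - k) // stride + 1
    return [sum(x[q * stride + j] * kernel[j] for j in range(k)) for q in range(n_out)]


def grad_of_sum(n, kernel, stride):
    """Gradient of sum(strided_conv1d(x)) with respect to each of the n inputs.
    The layer is linear, so a unit impulse at position p yields the p-th partial."""
    grads = []
    for p in range(n):
        impulse = [0.0] * n
        impulse[p] = 1.0
        grads.append(sum(strided_conv1d(impulse, kernel, stride)))
    return grads


print(grad_of_sum(9, [1.0, 1.0, 1.0], stride=2))
# [1.0, 1.0, 2.0, 1.0, 2.0, 1.0, 2.0, 1.0, 1.0]
```

Interior input positions alternate between receiving gradient from two outputs and from one, the same checkerboard pattern as the forward transposed convolution.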

References

[1] Goodfellow, Ian, et al. “Generative adversarial nets.” Advances in Neural Information Processing Systems. 2014.

[2] Odena, Augustus, et al. “Deconvolution and Checkerboard Artifacts.” Distill, 2016. http://doi.org/10.23915/distill.00003

[3] Arjovsky, Martin, Soumith Chintala, and Léon Bottou. “Wasserstein GAN.” arXiv preprint arXiv:1701.07875 (2017).

[4] Gulrajani, Ishaan, et al. “Improved training of Wasserstein GANs.” Advances in Neural Information Processing Systems. 2017.

[5] Donahue, Chris, Julian McAuley, and Miller Puckette. “Adversarial audio synthesis.” International Conference on Learning Representations. 2019.

[6] Bińkowski, Mikołaj, et al. “High fidelity speech synthesis with adversarial networks.” International Conference on Learning Representations. 2020.